GOSIM Schedule

May 6th

Schedule

AI & Agents

World-leading researchers sharing insights on AI and Agents

9:00

9:40

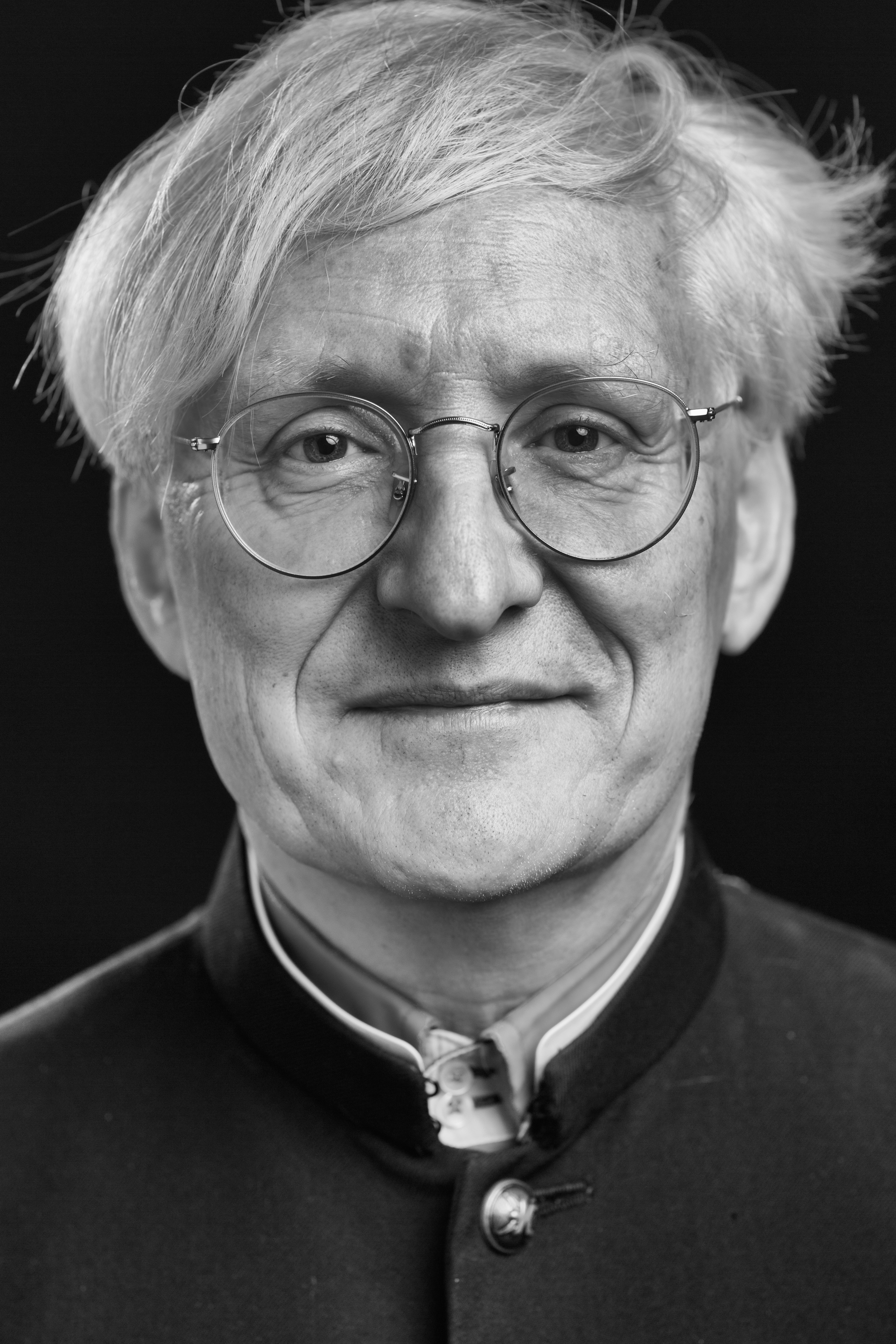

Opening Keynote: The End of the Paper Internet

Whenever new technologies are introduced, for a time they imitate the old technology they are replacing before evolving into their true form. The introduction of book printing changed the world: information became more available and much more affordable, and a whole new infrastructure and economy was created for the distribution of information. It also shifted ownership of information away from the church, which had been the source of all books before the printing press, and this in turn created great turmoil as new thought patterns emerged. But for 50 years after the printing press was invented, books imitated manuscripts, before finally becoming what we now understand as books. The internet in Europe is 36 years old this year. In very similar ways it has changed the world: information is more available and cheaper, and there is a whole new infrastructure and economy to support it. Similarly, it is creating new turmoil as we deal with the changing way that people obtain information. But, also similarly, the internet is still imitating pre-internet media: it could be described as paper documents with only the paper digitised away. So what should the internet really be like, and can we expect it to emerge in the next 15 years?

10:00

Fostering Responsible AI: Empowering Openness and Community Collaboration

In the realm of Generative Artificial Intelligence (GenAI), responsible innovation hinges on a steadfast dedication to openness and community collaboration. This talk illuminates the indispensable role of these principles in guiding GenAI development toward ethical and accountable outcomes.

We delve into the transformative influence of openness and community collaboration on the very fabric of GenAI systems. Through the exchange of knowledge, diverse viewpoints, and collective wisdom, we explore how to build AI systems transparently, laying a solid groundwork for responsible AI practices.

Central to our discussion is the Model Openness Framework (MOF), created by the Generative AI Commons within LF AI & Data. The MOF is a ranked classification system for evaluating machine learning (ML) models, providing a structured approach to promoting transparency and accountability in GenAI development.

Join us on a journey to unlock the full potential of Generative AI through open collaboration and transparency. Together, we can accelerate the building of AI systems with openness and community involvement, fostering responsible innovation for the benefit of all.

10:40

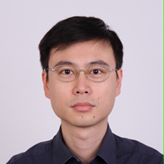

LLM for Coding, the State and Initiative

An in-depth look at the technical solutions and challenges of using large language model technology to improve code productivity. This talk introduces the current situation and experience of Chinese software developers in this field, and explores what software companies and the open-source community can do to advance the automation of software development.

11:20

11:40

OminiX – Unified Acceleration Framework for Both LLM and SD GenAI Models on the

OminiX is an open-source project that provides a unified acceleration framework for both Large Language Model (LLM) and Stable Diffusion (SD) GenAI models. It includes: 1) an open cell library supporting stable diffusion, diffusion transformers, and LLMs; 2) an acceleration runtime for LLM and SD models such as LLaMA2 7B and 13B, Mistral 7B, and other variants; and 3) an online fine-tuning runtime for stable diffusion and large language models.

In this talk, we will show how the OminiX runtime accelerates LLM and SD GenAI applications on edge devices.

12:20

Write Once Run Anywhere, But for GPUs

With the growing popularity of AI and Large Language Model (LLM) applications, there is an increasing demand for running and scaling these workloads in the cloud and on edge devices. However, the reliance on GPUs and hardware accelerators poses challenges for traditional container-based deployments. Rust and WebAssembly (Wasm) offer a solution: Wasm provides a portable bytecode format that abstracts away hardware complexities. LlamaEdge is a lightweight, high-performance, cross-platform LLM inference runtime. Written in Rust and built on the WasmEdge runtime, LlamaEdge provides LLM app developers with a standard API known as WASI-NN. Developers only need to write against this API and compile their programs to Wasm bytecode. The Wasm bytecode file can run on any device, where WasmEdge translates and routes Wasm calls to the underlying native libraries, such as llama.cpp, TensorRT-LLM, MLX, PyTorch, TensorFlow, candle, and burn.rs. The result is very small, portable Wasm apps that run LLM inference at full native speed across many different devices.

In this talk, we will discuss the design and implementation of LlamaEdge and demonstrate how it enables cross-platform LLM app development and deployment. We will also walk through several code examples: a basic sentence completion app, a chatbot, a RAG agent app with external knowledge in vector databases, and a Kubernetes-managed app across a heterogeneous cluster of different GPUs and NPUs. Attendees will learn how to run open source LLMs on their own devices and, more importantly, how to create and deploy their own customized LLM apps using LlamaEdge APIs.
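The "write once against one API, route to native backends" idea can be sketched in plain Rust. This is a minimal illustration under stated assumptions: the `Backend` trait, `Runtime`, `LlamaCpp`, `Mlx`, and `app` names are hypothetical, and this is not the WASI-NN interface or LlamaEdge's code. It only shows portable app code calling a single entry point while the runtime picks a device-specific backend.

```rust
use std::collections::HashMap;

// Hypothetical stand-in for a native inference library. This is NOT the
// WASI-NN interface; it only illustrates routing one API to many backends.
trait Backend {
    fn complete(&self, prompt: &str) -> String;
}

// Two toy backends; real ones would call into llama.cpp, MLX, etc.
struct LlamaCpp;
struct Mlx;

impl Backend for LlamaCpp {
    fn complete(&self, prompt: &str) -> String {
        format!("[llama.cpp] {prompt}...")
    }
}

impl Backend for Mlx {
    fn complete(&self, prompt: &str) -> String {
        format!("[mlx] {prompt}...")
    }
}

// Hypothetical runtime mapping a device kind to the backend installed there.
struct Runtime {
    backends: HashMap<String, Box<dyn Backend>>,
}

impl Runtime {
    fn new() -> Self {
        Runtime { backends: HashMap::new() }
    }

    fn register(&mut self, device: &str, backend: Box<dyn Backend>) {
        self.backends.insert(device.to_string(), backend);
    }

    // The single portable entry point the app is written against.
    // Returns None if no backend is registered for this device.
    fn infer(&self, device: &str, prompt: &str) -> Option<String> {
        self.backends.get(device).map(|b| b.complete(prompt))
    }
}

// "Write once": the app logic never names a concrete backend.
fn app(rt: &Runtime, device: &str) -> Option<String> {
    rt.infer(device, "Once upon a time")
}
```

Registering `LlamaCpp` for one device and `Mlx` for another lets the same `app` function run unchanged on both. In LlamaEdge, per the abstract, that routing happens inside WasmEdge rather than in application code.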

13:00

14:30

dora-rs: LLM Powered Runtime Code Change in Robots

In this talk, we show how allowing LLMs to modify a robot's codebase at runtime enables new forms of human-machine interaction. To achieve this, we use dora-rs, a robotics framework capable of changing code at runtime while keeping state, also known as hot-reloading. By pairing dora-rs with LLMs, we demonstrate that robots can be controlled and instructed in natural language to modify any aspect of the robot codebase.

This approach enables new human-robot interactions that were previously inaccessible because of the need to use existing predefined interfaces, paving the way for more sophisticated and widespread robotic applications that can better understand and respond to human needs.
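Hot-reloading while keeping state comes down to separating a node's logic from its state, so the logic can be swapped without resetting the state. The sketch below models this with a plain function pointer. `Node`, `RobotState`, `forward`, `backward`, and `reload` are hypothetical names, and how dora-rs actually loads new code (including code written by an LLM) is not shown here.

```rust
// State that must survive a code change (hypothetical robot odometry).
#[derive(Debug, Default)]
struct RobotState {
    steps: u32,
    position: i32,
}

// The node's "code" is modelled as a function pointer so it can be
// swapped at runtime. Hypothetical; this is not the dora-rs API.
type Behaviour = fn(&mut RobotState);

fn forward(s: &mut RobotState) {
    s.position += 1;
    s.steps += 1;
}

fn backward(s: &mut RobotState) {
    s.position -= 1;
    s.steps += 1;
}

struct Node {
    state: RobotState,
    behaviour: Behaviour,
}

impl Node {
    // Run the current logic once against the persistent state.
    fn tick(&mut self) {
        (self.behaviour)(&mut self.state);
    }

    // Hot-reload: replace the logic, leave the state untouched.
    fn reload(&mut self, new_behaviour: Behaviour) {
        self.behaviour = new_behaviour;
    }
}
```

After two `forward` ticks, a `reload(backward)`, and one more tick, the node sits at position 1 having taken 3 steps: the new logic picks up exactly where the old logic left off.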

15:10

Moxin: A Pure Rust Explorer for Open Source LLMs

Moxin is an open source tool that makes it easy for users to explore and experiment with open source LLMs on their own computers. It is assembled from a collection of loosely coupled Rust and Wasm building blocks:

* A Rust-native chatbot UI.

* A cross-platform LLM inference engine based on LlamaEdge.

* A Rust-native UI for managing, filtering, and displaying open source LLMs.

* A database and web service for a catalog of open source LLMs. The database provides an admin dashboard for the community to update those models.

* An in-process Rust messaging channel for the frontend widgets to communicate with the LlamaEdge model service and the model metadata service.

In this talk, we will showcase the Moxin app, discuss its architecture, and demonstrate how it serves as a template and component library for Rust developers to create their own cross-platform LLM applications. We will also discuss the project's roadmap, including timelines for the model database and plans for advanced features such as multimodal models and RAG apps. Attendees will learn how to build rich UI applications and LLM services using Rust and Wasm, and discover how Moxin is revolutionizing the way users interact with LLMs on their personal computers.

16:00

16:20

Dioxus: AI Driven UI

Imagine a world where you can build beautiful and robust cross-platform apps with just a few simple chat commands. The team behind Dioxus, the popular library for building cross-platform apps in Rust, will demonstrate how you can build, test, and ship your next app idea with Dioxus AI. In this talk, we’ll showcase an LLM fine-tuned on Dioxus code that lets you quickly iterate on app ideas without having to be a Rust expert. Our automatic styling tool leverages LLMs and hot-reloading to improve the look, feel, and accessibility of your app by generating new Tailwind classes and CSS on the fly. Our quality-assurance AI agent intelligently walks through your app, uncovering bugs and suggesting improvements. Our app-builder studio provides a suite of AI-enabled dev tools for building new components inspired by your Figma designs and marketing content. We’ll cover the Rust-based infrastructure powering these tools, including our local-first AI meta-framework Kalosm and the Dioxus UI library. The future of app development is bright, and we’re excited to share our vision with GOSIM.

17:00

Schedule

App & Web

High performance cross-platform app & web development

9:00

9:40

Opening Keynote: The End of the Paper Internet

10:00

The State of Rust GUI

A review of the state of the "Rust native" GUI ecosystem. This talk will cover: What is already implemented? What is being actively worked on in 2024? Which gaps and challenges remain? And what might future solutions to those gaps and challenges look like? We will also look at the structure of the ecosystem, and how to get involved as an application developer, a library/framework developer, or a company looking to invest in the space.

10:40

OpenHarmony for Next Gen Mobile

HarmonyOS is a new, independent operating system developed by Huawei.

While previous versions had an Android compatibility layer that allowed most Android apps to run without any modifications, the upcoming 5.0 release drops this layer, forcing developers to port their applications to the new (Open)Harmony APIs.

While there is already a huge ongoing effort to port the top 5,000 apps in China, this has gone largely unnoticed in Europe.

This talk will introduce OpenHarmony, its relation to HarmonyOS, what app development for OpenHarmony looks like, and why the target market is more than just Huawei devices. Using Servo, a Rust-based web rendering engine, as a case study, we delve into the process of porting existing applications with native Rust code to run seamlessly on OpenHarmony.

11:20

11:40

Full Stack Rust With Leptos

Rust has proven to be a strong choice for backend web services, but new and upcoming frameworks like Leptos have made it a strong choice for building interactive frontend web UIs as well. Come learn why you might want to build a full-stack Rust web app with Leptos, leveraging the power of Rust to deliver web apps that rival any other web stack.

12:20

Quake: Bridging the Build System Gap

The complexity of software has grown significantly over the past decades, outstripping the build systems that underlie it. Modern software requires build-time support for asset handling, cross-platform compilation, and more, but this gap is most often filled by hacked-together solutions that only add further technical debt. quake addresses this issue head-on, providing a simple but expressive interface over the Nushell language that allows any developer to construct robust, cross-platform build scripts without any magic incantations. Beyond quake itself, we'll dive deeper into other widely used build systems and the unique challenges they face, in order to better understand how we got where we are and what the future could look like.

13:00

14:30

Wrapping Cargo for Shipping

Follow along as we explore the road a Rust application takes from source into application stores and onto customer devices. We will discuss how we can post-process Cargo artifacts to integrate them into Windows, macOS, mobile platforms, and more. Next, we will attempt to please Apple's App Store validation, Microsoft's audits, and the scrutiny of Linux distributors, so that our Rust application reaches the distribution channels our users expect and deserve. Finally, we will look at the Osiris Project, the home of the tooling we use, which strives to document this process.

15:10

Modular Servo: Three Paths Forward

From the start, about ten years ago, Servo was meant to be a modular web engine. What does this mean, where do we stand today, and where are we going? Three distinct paths will be discussed: the embedding layer, independent projects using parts of Servo, and Servo integrating independent projects.

16:00

16:20

The Oniro Platform

Oniro is an Eclipse Foundation project dedicated to the development of an open source, vendor-neutral operating system (OS) platform. The Oniro Project was established through a collaboration between two global open source foundations: the Eclipse Foundation and the OpenAtom Foundation. Oniro builds on the solid foundation of OpenHarmony, an open source project operated by the OpenAtom Foundation and an operating system platform known for its versatility across a wide range of smart devices. The talk will cover how Oniro approaches mobile and IoT, and the challenges behind it.

17:00

Schedule

9:00

9:40

Opening Keynote: The End of the Paper Internet

10:00

The Current State of the Fediverse

A broad overview of what is happening in the fediverse, going beyond what the well-known names Mastodon and Threads are doing:

- what fediverse-native players are doing in the space (such as Mastodon and PeerTube)

- what tech platforms that are integrating (or working on integrating) ActivityPub are doing and thinking about (such as Threads and Flipboard)

- what other types of projects are working on with ActivityPub (zooming in on podcasts and forums with PodcastIndex, Discourse, and NodeBB)

10:40

Entering the World of Fediverse: Messaging with Matrix

Matrix 2.0 is the next big evolution of Matrix: a set of new APIs that provide instant login, instant sync, and instant launch; native support for OpenID Connect; native E2EE group VoIP; and general performance improvements to ensure that Matrix-based communication apps can outperform mainstream proprietary centralized alternatives. In this talk, we’ll explain how Matrix 2.0 is progressing, and how matrix-rust-sdk has become the core team's flagship Matrix client SDK, using the safety and performance of Rust to provide a gold-standard SDK implementation for the benefit of all.

11:20

11:40

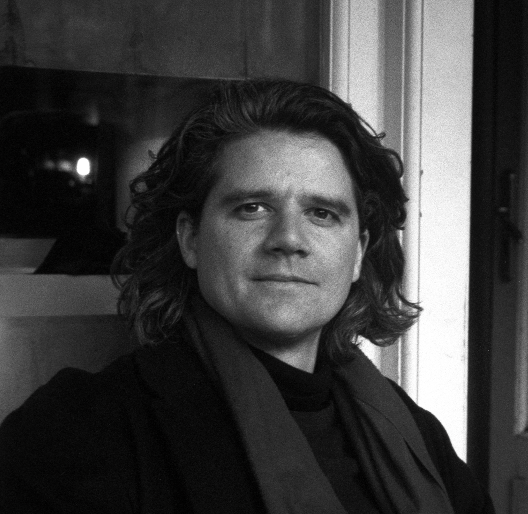

Using Cryptography to Decentralize Identity on the Fediverse

The Fediverse is a social network with a model that gives people a lot of freedom: choose your platform, choose your instance, or even set up your own instance. One can easily migrate, by choice or because an instance shuts down. This freedom comes with a catch: identity is distributed and can be volatile, which is a benefit for some and a downside for others. The solution is to maintain an online identity separate from one’s social accounts. This talk explains how Keyoxide enables people to create and maintain their own online identity in a decentralized manner, and how cryptography is used to prevent impersonation.
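Keyoxide's identity proofs are commonly described as bidirectional links: the key holder's signed profile claims an account, and that account publicly references the key's fingerprint. The sketch below shows only that two-way check, assuming the signature on the profile has already been verified elsewhere. `KeyProfile`, `Account`, and `verify` are hypothetical names, and no real cryptography is performed here.

```rust
// Hypothetical signed profile. We ASSUME its signature was already checked,
// so its list of claimed accounts really comes from the key holder.
struct KeyProfile {
    fingerprint: String,
    claims: Vec<String>, // account URLs claimed by the key holder
}

// Hypothetical public account data fetched from a fediverse instance.
struct Account {
    url: String,
    bio: String,
}

// A claim holds only if both directions agree: the key claims the account,
// and the account publicly points back at the key's fingerprint.
fn verify(profile: &KeyProfile, account: &Account) -> bool {
    let key_claims_account = profile.claims.iter().any(|c| c == &account.url);
    let account_claims_key = account.bio.contains(profile.fingerprint.as_str());
    key_claims_account && account_claims_key
}
```

An impostor can copy the fingerprint into their own bio, but the check still fails because the key holder never claimed that account; only the owner of the private key can change the signed list of claims.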

12:20

Robrix: A Multi-Platform Matrix & Fediverse Hub

Robrix is (currently) a new Matrix chat client application written in Rust to demonstrate and drive the feature set of Project Robius, a multi-platform app dev framework. Thanks to the Robius software stack, and in particular the Makepad UI toolkit, Robrix runs seamlessly across Android, iOS, macOS, Linux, and Windows (with web and OpenHarmony to come), all without a single line of platform-specific code.

This talk will cover the general architecture and features of Robrix, our experience developing apps in Rust and the challenges encountered therein, and how Robrix's needs have driven the development of ecosystem components.

Finally, we'll lay out our future vision for Robrix as an open-source "hub" app, bringing together many aspects of the fediverse beyond Matrix chat: decentralized social networks, news aggregators and forums, code views for git hosts, and the integration of AI features via local LLMs.

https://github.com/gosimfoundation/europe2024/blob/main/presentations/fediverse/Kevin_Boos-Robrix_Talk_GOSIM_Europe_May_6_2024_condensed_size.pdf

13:00

14:30

Mega - Decentralized Open Source Collaboration for Source Code & LLM

Mega is a groundbreaking monorepo and monolithic codebase management system, designed in particular for managing source code and large language models (LLMs). Mega's decentralized network of services harnesses the Git and Git LFS protocols, fostering an inclusive development ecosystem and bolstering data integrity. Its integration capabilities encompass advanced messaging protocols such as Matrix and Nostr, facilitating decentralized communication and collaboration. Mega revolutionizes open-source cooperation by offering a flexible, secure, and inclusive platform, empowering developers globally.

15:10

Do You Know Who Wrote Your Software?

In open source projects, it is quite common for software to consist of hundreds of dependencies. This is inherently a sign of proper collaboration in a healthy ecosystem. But in today’s world, it also poses a risk when we seek assurances about the pedigree of our software. As the thwarted attack on XZ Utils clearly shows, we need to pay more attention to our supply chain. In this presentation I want to raise awareness of this challenge from the perspective of a Rust developer, using the notions of the “burden problem” and the “trust problem”, and touching on issues such as software distribution, version management, open source licensing, and build-time security.
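To make the "hundreds of dependencies" point concrete, the sketch below walks a made-up dependency graph breadth-first and collects the set of maintainers whose code a project implicitly trusts. The `Registry` type, crate names, and maintainers are illustrative assumptions, not data from crates.io or any real project.

```rust
use std::collections::{HashMap, HashSet, VecDeque};

// Hypothetical, hand-written registry data; not fetched from crates.io.
struct Registry {
    deps: HashMap<&'static str, Vec<&'static str>>,        // crate -> direct deps
    maintainers: HashMap<&'static str, Vec<&'static str>>, // crate -> people
}

impl Registry {
    // Breadth-first walk collecting every transitive dependency of `root`.
    fn transitive_deps(&self, root: &str) -> HashSet<&'static str> {
        let mut seen = HashSet::new();
        let mut queue: VecDeque<&str> = VecDeque::from([root]);
        while let Some(name) = queue.pop_front() {
            let direct = self.deps.get(name).map(|v| v.as_slice()).unwrap_or(&[]);
            for &dep in direct {
                // `insert` returns false for crates already visited.
                if seen.insert(dep) {
                    queue.push_back(dep);
                }
            }
        }
        seen
    }

    // Every maintainer whose code ends up in `root`'s build.
    fn people_to_trust(&self, root: &str) -> HashSet<&'static str> {
        self.transitive_deps(root)
            .into_iter()
            .flat_map(|d| self.maintainers.get(d).into_iter().flatten().copied())
            .collect()
    }
}
```

Even in a toy graph where `app` lists only two direct dependencies, the walk surfaces four crates and four maintainers, one of them reachable only through a dependency of a dependency.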

16:00

16:20

Building the Linux for Payments - An Hourglass Approach

Federated payment systems across the world are very complex and diverse. Such payment systems could become a utility, like air or water, because payments are nothing but moving bytes to enable the flow of money between entities. For this to actually happen, the supporting software for payments should become a utility. But in the current world:

- Small businesses cannot afford to build the kind of payments infrastructure that a large business can build in-house.

- A lot of repeated work happens in silos to innovate on federated payment systems and to connect digital applications to the payment building blocks.

- The diversity of payment systems, spanning banks, payment processors, country-specific card networks, regulations, and payment methods, keeps growing.

Hence this is the right time for a global unifier to bring the hourglass approach into payments, similar to Linux or the Internet Protocol. For such a global unifier to succeed in the federated payments world, on one hand it has to be open and community driven, and on the other hand it has to embrace capitalism and be bound by regulations.
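The hourglass approach puts one narrow, stable interface between many applications above and many payment providers below. A minimal sketch of that narrow waist as a Rust trait, assuming hypothetical `Connector`, `CardNetwork`, `BankTransfer`, and `checkout` names that are not taken from any specific project:

```rust
// The narrow waist: one small interface every payment provider adapts to.
// Hypothetical names; amounts are in minor units (e.g. cents).
trait Connector {
    fn authorize(&self, amount_minor: u64, currency: &str) -> Result<String, String>;
}

// Toy providers below the waist, each with its own rules.
struct CardNetwork;
struct BankTransfer;

impl Connector for CardNetwork {
    fn authorize(&self, amount_minor: u64, currency: &str) -> Result<String, String> {
        Ok(format!("card:{amount_minor}{currency}"))
    }
}

impl Connector for BankTransfer {
    fn authorize(&self, amount_minor: u64, currency: &str) -> Result<String, String> {
        // Provider-specific restriction, hidden behind the common interface.
        if currency != "EUR" {
            return Err("only EUR supported".to_string());
        }
        Ok(format!("bank:{amount_minor}{currency}"))
    }
}

// Application code above the waist never sees provider specifics.
fn checkout(connector: &dyn Connector, amount_minor: u64, currency: &str) -> Result<String, String> {
    connector.authorize(amount_minor, currency)
}
```

Adding a new provider means implementing one trait, and every application written against `checkout` gains it without changes; that is the property the hourglass shape is meant to provide.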